
The full error output is as follows:

[root@ceph-01 ~]# ceph -s

cluster:

id: c8ae7537-8693-40df-8943-733f82049642

health: HEALTH_WARN

2 daemons have recently crashed

services:

mon: 3 daemons, quorum ceph-01,ceph-02,ceph-03 (age 24m)

mgr: ceph-03(active, since 2d), standbys: ceph-02, ceph-01

mds: cephfs-abcdocker:1 cephfs:1 i4tfs:1 {cephfs-abcdocker:0=ceph-02=up:active,cephfs:0=ceph-03=up:active,i4tfs:0=ceph-01=up:active}

osd: 5 osds: 5 up (since 24m), 5 in (since 116m)

rgw: 2 daemons active (ceph-01, ceph-02)

task status:

data:

pools: 19 pools, 880 pgs

objects: 10.99k objects, 40 GiB

usage: 126 GiB used, 154 GiB / 280 GiB avail

pgs: 880 active+clean

io:

client: 67 KiB/s rd, 2.0 KiB/s wr, 67 op/s rd, 45 op/s wr

[root@ceph-01 ~]# ceph -s

cluster:

id: c8ae7537-8693-40df-8943-733f82049642

health: HEALTH_WARN

2 daemons have recently crashed

services:

mon: 3 daemons, quorum ceph-01,ceph-02,ceph-03 (age 25m)

mgr: ceph-03(active, since 2d), standbys: ceph-02, ceph-01

mds: cephfs-abcdocker:1 cephfs:1 i4tfs:1 {cephfs-abcdocker:0=ceph-02=up:active,cephfs:0=ceph-03=up:active,i4tfs:0=ceph-01=up:active}

osd: 5 osds: 5 up (since 24m), 5 in (since 116m)

rgw: 2 daemons active (ceph-01, ceph-02)

task status:

data:

pools: 19 pools, 880 pgs

objects: 10.99k objects, 40 GiB

usage: 126 GiB used, 154 GiB / 280 GiB avail

pgs: 880 active+clean

io:

client: 50 KiB/s rd, 18 KiB/s wr, 49 op/s rd, 33 op/s wr

View the detailed health information:

[root@ceph-01 ~]# ceph health detail

HEALTH_WARN 2 daemons have recently crashed

RECENT_CRASH 2 daemons have recently crashed

mgr.ceph-03 crashed on host ceph-03 at 2022-12-24 05:05:45.116127Z

mgr.ceph-01 crashed on host ceph-01 at 2022-12-31 03:01:15.555695Z

Ceph's crash module collects information about daemon crash dumps and stores it in the Ceph cluster for later analysis.

Let's list the recorded crashes with the crash module:

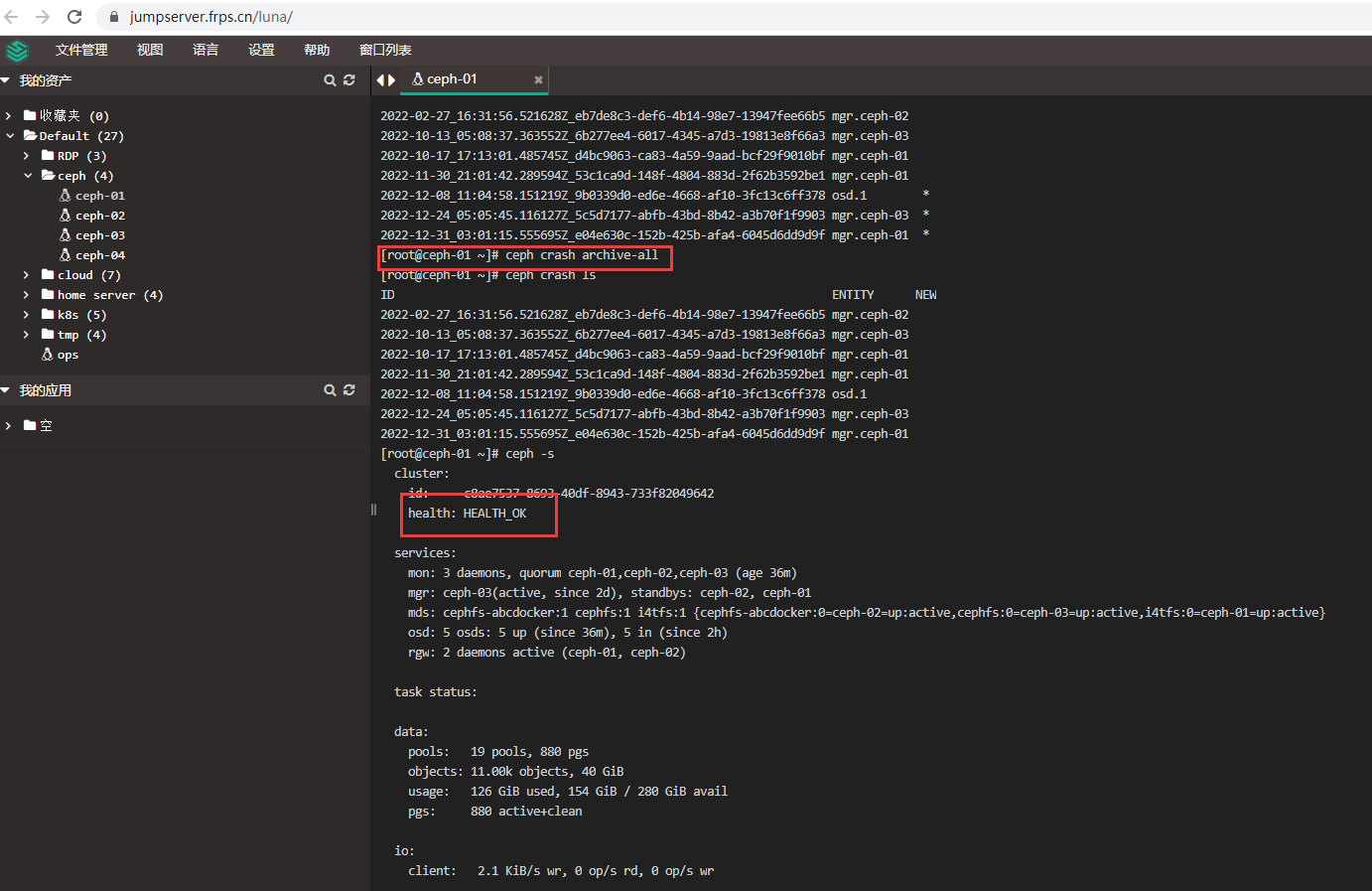

[root@ceph-01 ~]# ceph crash ls

ID ENTITY NEW

2022-02-27_16:31:56.521628Z_eb7de8c3-def6-4b14-98e7-13947fee66b5 mgr.ceph-02

2022-10-13_05:08:37.363552Z_6b277ee4-6017-4345-a7d3-19813e8f66a3 mgr.ceph-03

2022-10-17_17:13:01.485745Z_d4bc9063-ca83-4a59-9aad-bcf29f9010bf mgr.ceph-01

2022-11-30_21:01:42.289594Z_53c1ca9d-148f-4804-883d-2f62b3592be1 mgr.ceph-01

2022-12-08_11:04:58.151219Z_9b0339d0-ed6e-4668-af10-3fc13c6ff378 osd.1 *

2022-12-24_05:05:45.116127Z_5c5d7177-abfb-43bd-8b42-a3b70f1f9903 mgr.ceph-03 *

2022-12-31_03:01:15.555695Z_e04e630c-152b-425b-afa4-6045d6dd9d9f mgr.ceph-01 *

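# An asterisk (*) in the NEW column marks a new (not yet archived) crash. The listing above shows crash records for both mgr and osd daemons, so next we check the OSDs and the mgr to see whether the warning is simply caused by crash records that were never archived.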
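As an aside on the listing above, the asterisk rule can be applied mechanically. The following is a minimal sketch, assuming the three-column layout shown in this post (ID, ENTITY, and an optional `*`); the function name `new_crash_ids` is made up for illustration and is not part of Ceph.

```shell
# Print the IDs of unarchived crashes from `ceph crash ls` output read on stdin.
# Assumption: a row is "new" when its last whitespace-separated field is "*".
new_crash_ids() {
    awk '$NF == "*" { print $1 }'
}

# Possible usage on a cluster node (needs the ceph CLI and an admin keyring):
#   ceph crash ls | new_crash_ids
#   ceph crash info <id>    # show the metadata and backtrace of one crash
```

Ceph also provides `ceph crash ls-new`, which lists only the unarchived entries directly; the filter is mainly useful when working from saved output.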

[root@ceph-01 ~]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 0.27338 root default

-3 0.07809 host ceph-01

0 hdd 0.04880 osd.0 up 1.00000 1.00000

3 hdd 0.02930 osd.3 up 1.00000 1.00000

-5 0.04880 host ceph-02

1 hdd 0.04880 osd.1 up 1.00000 1.00000

-7 0.04880 host ceph-03

2 hdd 0.04880 osd.2 up 1.00000 1.00000

-9 0.09769 host ceph-04

4 hdd 0.09769 osd.4 up 1.00000 1.00000

[root@ceph-01 ~]# ceph -s |grep mgr

mgr: ceph-03(active, since 2d), standbys: ceph-02, ceph-01

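The commands above show that the cluster itself is fine: all five OSDs are up and the mgr is active with two standbys. The warning is therefore effectively a false alarm caused by crash records that have not been archived, so the next step is to archive them.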
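The OSD part of this check can be sketched as a small filter over `ceph osd tree` output. This is only an illustration under the assumption that each OSD row contains a field of the form `osd.N` immediately followed by its status, as in the output above; `osds_not_up` is a hypothetical helper name.

```shell
# Print the name of every OSD whose status is not "up".
# Reads `ceph osd tree` output on stdin; prints nothing when all OSDs are up.
osds_not_up() {
    awk '{
        # Find the "osd.N" field and inspect the field right after it (STATUS).
        for (i = 1; i < NF; i++)
            if ($i ~ /^osd\.[0-9]+$/ && $(i + 1) != "up")
                print $i
    }'
}

# Possible usage on a cluster node:
#   ceph osd tree | osds_not_up
```

Empty output matches the situation in this post, where every OSD reports `up`.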

# Method 1: suitable when only one or two crashes are unarchived

#ceph crash ls

#ceph crash archive <id>

# Method 2: suitable when many crashes are unarchived; here we simply run the following command

#ceph crash archive-all
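When several specific crashes should be archived but `archive-all` feels too broad, the first method can be repeated per ID. The sketch below only prints the commands so they can be reviewed before anything is run; `archive_commands` is a hypothetical helper, and it assumes one crash ID per input line.

```shell
# Emit one `ceph crash archive <id>` command per crash ID read on stdin.
# Blank lines are skipped. Nothing is executed; the commands are only printed.
archive_commands() {
    while IFS= read -r id; do
        if [ -n "$id" ]; then
            printf 'ceph crash archive %s\n' "$id"
        fi
    done
}

# Possible usage, with IDs copied from `ceph crash ls` into a file (ids.txt is
# an assumed file name):
#   archive_commands < ids.txt         # review the generated commands
#   archive_commands < ids.txt | sh    # then run them
```

After archiving, running `ceph -s` again should no longer report the recent-crash warning, provided no other health checks are failing. Archived crashes remain visible in `ceph crash ls`, just without the asterisk.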